"Hitting ‘Deploy’ always brings that little jolt of adrenaline—whether you're fresh out of school or a 15-year pro, it’s just part of the ride. But with experience, both home runs and disasters included, you learn that having a solid checklist changes everything. Instead of crossing your fingers and hoping for the best, you’re taking control, cutting out most of the potential headaches and those dreaded late-night alerts. Let’s dive into the essentials that make launching code feel more like a win than a gamble. Here is my checklist - So let’s dive right in ⚡️

1: Establish a Strong Foundation with Testing 🧢

Errors often creep into our code through logical flaws or unhandled cases. Automated testing verifies that our code behaves as expected and guards against regressions when the code changes later.

- Automated Testing: Implement automated test cases for every core functionality. Many tools across languages and frameworks help with this, such as RSpec (Ruby), JUnit (Java), AndroidX Test (Android), and Jest (JavaScript).

- Pipelines for Test Validations: Set up CI/CD pipelines that run these tests before code is merged. This ensures that new code does not break existing functionality.

At Apollo, Ruby’s RSpec is the backbone of testing. We maintain a file hierarchy in which each source file has a corresponding test file, so that every piece of code written can be tested automatically. Robust CI/CD pipelines ensure these tests validate any new code that goes into production.

It is equally important to be mindful of what we are writing and to cover it in our test cases.

Example: Let’s say you want to validate if a string is a palindrome. Consider edge cases like:

- "Aisia" (case sensitivity)

- "aisia " (whitespace)

Without proper handling, these can lead to unexpected results.

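A minimal sketch of how the palindrome edge cases above might be handled. The helper name `palindrome?` and the choice to normalize by trimming whitespace and lowercasing are assumptions made for illustration, not a prescribed implementation.

```ruby
# Hypothetical helper: normalizes the input before comparing, so that
# letter case and surrounding whitespace do not change the answer.
def palindrome?(text)
  normalized = text.to_s.strip.downcase
  normalized == normalized.reverse
end

puts palindrome?("Aisia")   # mixed case
puts palindrome?("aisia ")  # trailing whitespace
puts palindrome?("apollo")  # not a palindrome
```

Each edge case listed above would then become its own test case, so a future change to the normalization cannot silently break it.
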
There are other flavors of testing that we should be aware of:

- UX Testing to check if our code is disrupting any existing user experiences.

- Integration Testing to ensure our code can handle expected responses and errors from third-party services or other independent components.

- Functional Testing to verify that our code performs its intended functions accurately.

- Load/Stress Testing to confirm that our code can scale as needed.

- Access/Authorization/Authentication Checks to ensure only users with the correct permissions and access are allowed.

2: Handle Edge Cases Proactively 🥢

Edge cases often get overlooked in the rush to meet deadlines, but they deserve dedicated time and attention. Taking those extra few hours upfront not only helps identify tricky scenarios but also gives us a clear understanding of the limitations of our system.

- Identify and Document Edge Cases: As you write code, think of all possible variations in inputs, especially those that deviate from the expected norm.

- Use Logging and Alerts: Implement logs and alerts to monitor unusual inputs or scenarios. This helps capture data on unanticipated behavior and address it promptly.

A while back, while investigating inconsistent data between what Apollo sent and what was reflected in our customers' CRMs, we identified an edge case during testing that had never been caught or logged before. It led to an inconsistent state that the users were never aware of. By handling these errors correctly and surfacing them to the right teams and stakeholders, we were able to capture meaningful insights, making our system better and more reliable.

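The logging advice above can be sketched as follows, using Ruby's standard `Logger`. The method name `sync_contact`, the payload keys, and the return values are hypothetical and only serve to show an unusual input being recorded instead of silently dropped.

```ruby
require "logger"

# Hypothetical sync step. The payload keys (:id, :email) and the return
# values (:synced, :skipped) are assumptions made for this sketch.
def sync_contact(payload, logger: Logger.new($stdout))
  email = payload[:email].to_s.strip

  if email.empty?
    # Record the unusual input instead of silently dropping it, so the
    # inconsistency is visible to whoever monitors the logs.
    logger.warn("sync_contact skipped: missing email for contact id=#{payload[:id].inspect}")
    return :skipped
  end

  :synced
end

sync_contact({ id: 42, email: "  " })
sync_contact({ id: 43, email: "ana@example.com" })
```

An alert can then be built on top of the warning, for example by counting how often it appears in a given window.
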
3: Manage Third-Party Dependencies 🧩

When our systems and services rely on third-party APIs and integrations, there is always scope for failure. We should put prevention mechanisms in place against single points of failure:

- Identify which sorts of errors we will retry and what the exit criteria are

- Ensure the services that interact with that third-party system have a backup and are traceable

A few months back, we released a closed beta of Webhooks, where the core job was to send JSON-based notifications to a webhook URL when an event was triggered, as configured by the users. This required us to use Mechanize, a Ruby library for web interactions. If this service or the network interaction fails, the system retries and handles the retriable errors gracefully, logging and storing all the information we need to trace back at any step and reconstruct the notification to be sent in case of a full failure.

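A minimal sketch of "which errors to retry and when to stop". It does not use Mechanize's API; the error classes, the attempt limit, and the backoff values are all assumptions, and the block passed in stands in for the real network call.

```ruby
# Hypothetical error classes: which failures count as retriable is a
# decision each integration has to make for itself.
class RetriableError < StandardError; end
class FatalError < StandardError; end

# Runs the block, retrying only retriable failures with exponential
# backoff. Exit criteria: success, a non-retriable error, or max_attempts.
def with_retries(max_attempts: 3, base_delay: 0.5)
  attempt = 0
  begin
    attempt += 1
    yield attempt
  rescue RetriableError => e
    raise if attempt >= max_attempts # give up: retries exhausted

    warn "attempt #{attempt} failed (#{e.message}), retrying"
    sleep(base_delay * (2**(attempt - 1)))
    retry
  end
end

# Usage: the block fails twice with a retriable error, then succeeds.
result = with_retries(base_delay: 0) do |attempt|
  raise RetriableError, "timeout" if attempt < 3

  :delivered
end
puts result
```

A `FatalError` (for example, a malformed payload) is not rescued here, so it propagates on the first attempt instead of wasting retries.
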
4: Centralize Constants 🍀

In many common cases, we write code that follows constraints, such as numerical values that we hard-code as limits. If there isn't a "single source of truth" for these values, we may end up creating a huge number of duplicates. Then, if we modify the value in one place and miss another, we may break the functionality completely, with downstream impact as well. This is also true for constants shared between the frontend and backend.

Our bulk-select option allows users to select up to 50K records at a time on certain searcher pages. However, this selection limit is capped and governed by the tier of the team. If the frontend allows a team to select 10K records according to their plan, and the backend does not use the same variable/function, the API can potentially be abused by making direct hits to the server. To guard against this, we have middleware set up to validate each request received within the app and prevent misuse.

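One way to picture a single source of truth for such limits is sketched below. The module name, the tier names, and the `:free` limit are hypothetical; only the 10K and 50K figures come from the example above.

```ruby
# Single source of truth for bulk-selection limits. The tier names and
# the :free limit are hypothetical values chosen for this sketch.
module SelectionLimits
  MAX_RECORDS_BY_TIER = {
    free: 1_000,
    basic: 10_000,
    enterprise: 50_000
  }.freeze

  # Unknown tiers fall back to the most restrictive limit.
  def self.max_for(tier)
    MAX_RECORDS_BY_TIER.fetch(tier, MAX_RECORDS_BY_TIER.values.min)
  end

  # Server-side check, meant for whatever validates incoming requests.
  def self.allowed?(tier, requested_count)
    requested_count.between?(1, max_for(tier))
  end

  # The same number, in a shape the frontend can consume.
  def self.client_config(tier)
    { max_selectable_records: max_for(tier) }
  end
end

puts SelectionLimits.allowed?(:basic, 10_000)
puts SelectionLimits.allowed?(:basic, 50_000)
puts SelectionLimits.client_config(:basic)
```

Because both the request validation and the value handed to the frontend read from the same constant, changing a limit is a one-line edit.
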
5: Normalize Code Across Components 🛠️

When similar code snippets are scattered across different components expected to have the same functionality, it becomes challenging to maintain consistency. It is important to normalize shared logic into common functions or libraries and ensure that these serve as a single source of truth.

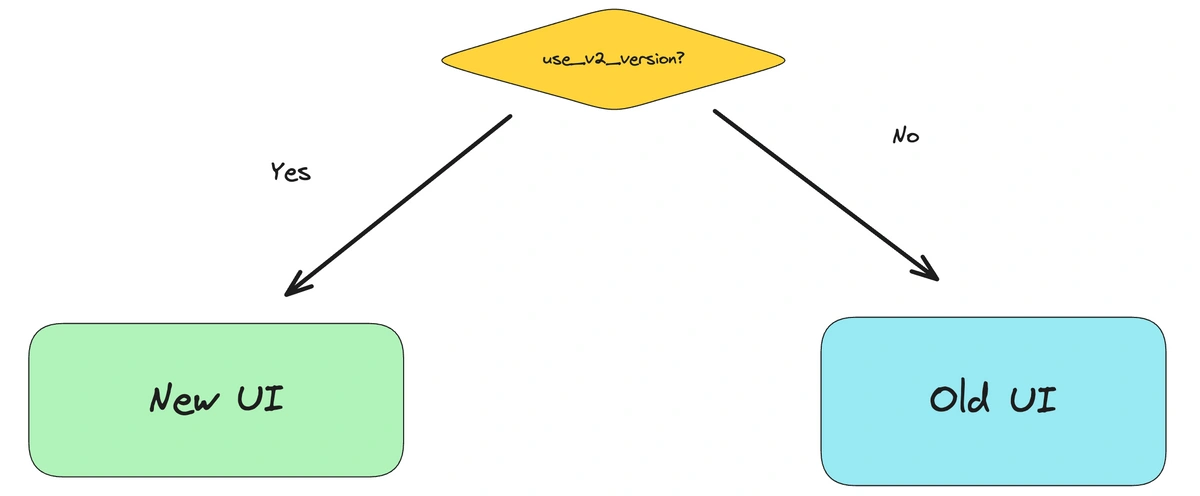

A very clear example of this is when we migrate from one version to another or run experiments on different user experiences.

The frontend and backend systems may carry different definitions of when to use the new version! If that is the case, things can get out of control, and your backend may return data that your frontend has no idea how to support.

For example:

    # Determines whether or not to use the v2 version of the feature
    def use_v2_version?(user)
      user.present? &&
        user.supports_new_ui? &&
        user.is_reading_this_article &&
        Date.today.wday.between?(1, 5)
    end

At Apollo, we store such flags in isolation in a team-level configuration, which is used by both the frontend and backend to power the main website.

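A rough sketch of the idea of one stored flag feeding both sides. `TeamConfig`, the `:use_v2` flag name, and the payload shape are all assumptions made for illustration and do not describe Apollo's actual configuration.

```ruby
require "json"

# Hypothetical team-level configuration. The flag name :use_v2 and the
# payload shape are assumptions made for this sketch.
TeamConfig = Struct.new(:flags) do
  def enabled?(flag)
    flags.fetch(flag, false)
  end
end

# Backend code branches on the stored flag...
def render_feature(team_config)
  team_config.enabled?(:use_v2) ? "v2 response" : "v1 response"
end

# ...and the frontend receives the same value instead of recomputing it.
def bootstrap_payload(team_config)
  JSON.generate({ feature_flags: { use_v2: team_config.enabled?(:use_v2) } })
end

config = TeamConfig.new({ use_v2: true })
puts render_feature(config)
puts bootstrap_payload(config)
```

The point of the design is that the decision is made once and shared, so the two sides cannot drift apart.
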
6: Isolate Functional Dependencies ☠️

If our function depends on others, it’s crucial to handle errors that may originate from these dependent functions. An important thing to note is that the failure of other components should not hamper the execution of the current component.

    def perform_something_else
      # faulty function that raises an error
    end

    def perform
      # for all the requests that have status = received
      # requests.update_all(status: :in_progress)
      # a huge CPU / IO / network-intensive operation
      perform_something_else
    end

For example, in the above case, errors raised by perform_something_else can have the following impacts if not handled properly:

- Any operations or computations done before perform_something_else would be wasted if not captured correctly

- The states of the system could become inconsistent, as the requests are marked as in progress but were never completed

- The system could reach an intermediary state where we cannot pick up those requests again, because the condition status = received is no longer satisfied

To tackle this, we should evaluate fallback mechanisms and design the system such that it is able to reach a terminal and acceptable state.

Example implementation:

    def perform
      # for all the requests that have status = received
      # requests.update_all(status: :in_progress)
      # a huge CPU / IO / network-intensive operation
      begin
        perform_something_else
      rescue => e
        # log and trace the error
        # handle terminal states and recover
        # requests.update_all(status: :received)
      end
    end

For enriching our data using CSV Enrichment, we run several processes in the background, and one of them is to get the email of the requested person. In case of any errors or delays due to which we are unable to find the email of the requested person, we defer the parent CSV enrichment request by a few seconds. This lets the child email service recover and gives us a better probability of getting the email, without wasting resources on timing out or retrying the parent CSV enrichment job.

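The deferral described above for CSV Enrichment could look roughly like this. The error class, the `enrich_row` method, the injected `email_lookup`, and the 5-second delay are hypothetical; the sketch only returns what should happen to the parent job rather than talking to a real queue.

```ruby
# Hypothetical error raised when the child email service is struggling.
class EmailServiceUnavailable < StandardError; end

DEFER_SECONDS = 5 # assumed value standing in for "a few seconds"

# Returns what should happen to the parent job for this row: either it
# is done, or it should be re-enqueued to run again at `run_at`.
def enrich_row(row, email_lookup:, now: Time.now)
  email = email_lookup.call(row)
  return { status: :done, email: email } if email

  { status: :deferred, run_at: now + DEFER_SECONDS }
rescue EmailServiceUnavailable
  # Give the child service time to recover instead of retrying at once.
  { status: :deferred, run_at: now + DEFER_SECONDS }
end

flaky_lookup = ->(_row) { raise EmailServiceUnavailable }
puts enrich_row({ name: "Ana" }, email_lookup: flaky_lookup)[:status]
```

Either way the parent job ends in a known state (`:done` or `:deferred`), which is the terminal, acceptable state the section argues for.
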
7: The strings of the code 🧵

Strings are among the most notorious components of our code. The errors, messages, and warnings we show to the user are all powered by strings. There is huge potential for inconsistencies in the language and content of the strings used across our code, which impacts user experience.

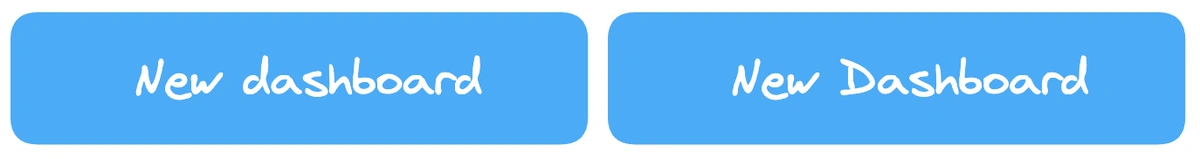

Can you spot the difference between these two buttons?

Even though they look the same and intend to convey the same information, if the strings are written separately across the code, we may not get a consistent result.

If we had a single source that tracked these strings, they would be easier to manage, more concise, and reusable. We just have to find the right fit here: maintaining the keys for strings and avoiding duplication takes effort, but it is helpful in the long run.

Inconsistencies also arise in backend-frontend interactions. For example, say the same API powers two different frontend components. In the first one, the error is displayed as is, rendered straight from the backend. In the second, the frontend applies some sort of transformation to it. A way to solve this is to use mappable codes and let the frontend map its error or success messages from those codes.

Now here comes the magic of storing the strings in a mapped way ✨

After serving customers who understand English, what if you want to build for more than one language? All you would have to do is translate your strings file into corresponding strings files for the other languages and load your strings such that your app uses the right locale (language), and tada 🎉, you have an app that is multilingual.

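A small sketch of mapped codes combined with locales. The codes, the translations, and the helper `message_for` are made up for illustration; a real application would more likely load these from per-locale strings files.

```ruby
# Hypothetical message catalog keyed by locale and code. The codes and
# translations are made up for this sketch.
MESSAGES = {
  en: {
    limit_exceeded: "You can select up to %{max} records.",
    unauthorized: "You do not have access to this resource."
  },
  es: {
    limit_exceeded: "Puedes seleccionar hasta %{max} registros."
  }
}.freeze

DEFAULT_LOCALE = :en

# Looks up the message for a code, falling back to the default locale
# when the requested locale has no translation for it.
def message_for(code, locale: DEFAULT_LOCALE, **params)
  template = MESSAGES.dig(locale, code) || MESSAGES.dig(DEFAULT_LOCALE, code)
  return code.to_s unless template # unknown code: show the raw code

  format(template, params)
end

puts message_for(:limit_exceeded, max: 10_000)
puts message_for(:limit_exceeded, locale: :es, max: 10_000)
puts message_for(:unauthorized, locale: :es) # falls back to English
```

The backend only ever returns the code (for example `limit_exceeded`), and every frontend component resolves it through the same catalog.
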
For example, the Android framework enforces specific lint checks and shows tiny little warnings whenever we try to assign a raw string that is not defined in our strings.xml file. This ensures that every string is mapped to a standard message. This is true not only for strings but also for other resources such as size definitions (16dp width), style resources, and colors. All these resources are wrapped up neatly in separate XML files for consumption.

8: Spy, Benchmark & Profile 🔍

Let's look at this code to understand it:

    # enrichments is an array of enrichments on different contacts
    enrichment_contacts = enrichments.map { |enrichment| enrichment.contact }

Now, .contact could be anything: a field, an embedded object, or even an associated model. On each such interaction, we should ask the following:

- How many DB queries would it trigger in each case?

- How many documents will it scan to render that result?

- How many documents will it return on a single network call?

Taking contact to be an associated Mongo model, each enrichment.contact call will hit the database. Therefore, for N enrichments, we would end up hitting the DB N times, potentially making our execution slower. We can solve this problem by making a single bulk call instead of N separate ones. To catch this before deploying to production, we should check how many database calls this line of code makes, which index it uses, how many documents it scans, and so on. We can set up profilers that output the raw, verbose query logs to determine where we are losing efficiency, and run .explain on the raw Mongo queries to analyze the execution stats, the number of documents scanned, the index used, etc.

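To make the N-versus-1 difference concrete, here is a sketch that replaces the database with an in-memory stand-in that counts queries. `ContactStore`, its methods, and the `Enrichment` struct are hypothetical and are not Mongo or ODM APIs.

```ruby
# Hypothetical stand-in for a database collection that counts queries.
class ContactStore
  attr_reader :query_count

  def initialize(contacts_by_id)
    @contacts_by_id = contacts_by_id
    @query_count = 0
  end

  # One query per call: this is what each enrichment.contact would cost.
  def find(id)
    @query_count += 1
    @contacts_by_id[id]
  end

  # One query for the whole batch, returned as an id => contact Hash.
  def find_many(ids)
    @query_count += 1
    @contacts_by_id.slice(*ids)
  end
end

Enrichment = Struct.new(:contact_id)
enrichments = [1, 2, 3].map { |id| Enrichment.new(id) }
contacts = { 1 => "Ana", 2 => "Ben", 3 => "Cy" }

# N separate lookups: N queries.
slow_store = ContactStore.new(contacts)
slow_result = enrichments.map { |enrichment| slow_store.find(enrichment.contact_id) }

# One bulk lookup, then in-memory matching: a single query.
fast_store = ContactStore.new(contacts)
by_id = fast_store.find_many(enrichments.map(&:contact_id))
fast_result = enrichments.map { |enrichment| by_id[enrichment.contact_id] }

puts "per-item: #{slow_store.query_count} queries, bulk: #{fast_store.query_count} query"
```

Both approaches return the same contacts; only the number of round trips differs, which is exactly what a query profiler would surface.
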
Apart from hits to the DB, it is important to analyze the time taken by crucial services and steps. For this, we use the Benchmark Ruby gem to measure the execution time of a component. This is extremely helpful in analyzing the time taken by different steps of execution and shows where we need improvement. We can also set up benchmarking for the memory allocated by sensitive operations.

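A minimal sketch of timing individual steps with `Benchmark.realtime`. The helper name `timed` and the two toy steps are assumptions; the measured numbers will vary from run to run.

```ruby
require "benchmark"

# Runs the block, stores its wall-clock time (in seconds) under `label`,
# and returns the block's result. The helper name `timed` is hypothetical.
def timed(label, timings)
  result = nil
  timings[label] = Benchmark.realtime { result = yield }
  result
end

timings = {}

# Two toy steps standing in for the real stages of a job.
rows = timed(:parse, timings) do
  ("a,b,c\n" * 10_000).lines.map { |line| line.chomp.split(",") }
end
total = timed(:count, timings) { rows.sum(&:size) }

timings.each { |step, seconds| puts format("%-6s %.4fs", step, seconds) }
puts "cells counted: #{total}"
```

Keeping the timings per step, rather than one number for the whole job, is what makes it possible to see which step deserves attention.
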
There are other super useful tools like Vernier, which gives a sampled breakdown of the execution time and of how much time each native or external function took. New Relic helps us profile the components contributing to the total execution time of an API call, aiding in building smoother and faster user experiences. For example, a couple of months ago, we observed that the time to index a document on Elasticsearch was pretty significant. On analyzing the indexing call with Vernier, we found that a native .split call somewhere was using around 10% of the time, and we replaced it to decrease this time.

9: Monitor and Learn 📖

Even with the best practices in place, errors can and will occur. The key is to:

💭 Know how to find them

💭 Know what to do with them

💭 Go on to fix them

Implementing basic observability helps us identify a lot of things: where code breaks and why, which service has higher latency than expected, which errors are transient, and which systems or resources we need to scale given the current configurations and workload. While revamping our CRM Dashboard, we invested effort in ensuring that the current Elasticsearch cluster configuration could handle the current and future search traffic and storage. One of the most important insights that helped us reach the right decisions came from observing the load we were getting from all the sources, using some customised metrics, and identifying bottlenecks.

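As a rough illustration of a customised metric for load per source, the sketch below records latencies in memory and summarizes them. `LoadMetrics` and the source names are hypothetical; a real setup would ship these numbers to a metrics backend instead of keeping them in a Hash.

```ruby
# Hypothetical in-memory recorder for per-source load and latency.
class LoadMetrics
  def initialize
    @latencies_ms = Hash.new { |hash, source| hash[source] = [] }
  end

  def record(source, latency_ms)
    @latencies_ms[source] << latency_ms
  end

  # Per-source request count plus average and worst latency.
  def summary
    @latencies_ms.transform_values do |samples|
      {
        count: samples.size,
        avg_ms: samples.sum.fdiv(samples.size),
        max_ms: samples.max
      }
    end
  end
end

metrics = LoadMetrics.new
metrics.record(:dashboard_search, 120)
metrics.record(:dashboard_search, 80)
metrics.record(:bulk_export, 900)

metrics.summary.each { |source, stats| puts "#{source}: #{stats}" }
```

Breaking the numbers down by source is what lets a bottleneck be attributed to a specific caller rather than to the cluster as a whole.
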
This checklist is far from exhaustive. I have tried to cover some of the common pitfalls I faced and the ideas we have in place for solving those :)
