Introduction

Have you ever asked, “Why aren’t my emails reaching my recipients’ mailboxes?” If so, you’ve come to the right place.

Email deliverability is a complex and expansive topic, spanning domain setup and authentication configuration, mailbox setup, warmup and ramp-up, metrics tracking and analysis, and much more. For everyone from technically savvy power users trying to maximize their ROI to newcomers just trying to get their outbound pipeline up and running, deliverability poses a huge challenge. The traditional process for solving deliverability problems combines research, support inquiries, and sometimes engineering support, and it can take days or weeks. Our solution to disrupt this flow is a real-time, AI-powered deliverability agent integrated into our AI assistant ecosystem. It combines systematic analysis with domain expertise to help users fix their email deliverability issues in minutes.

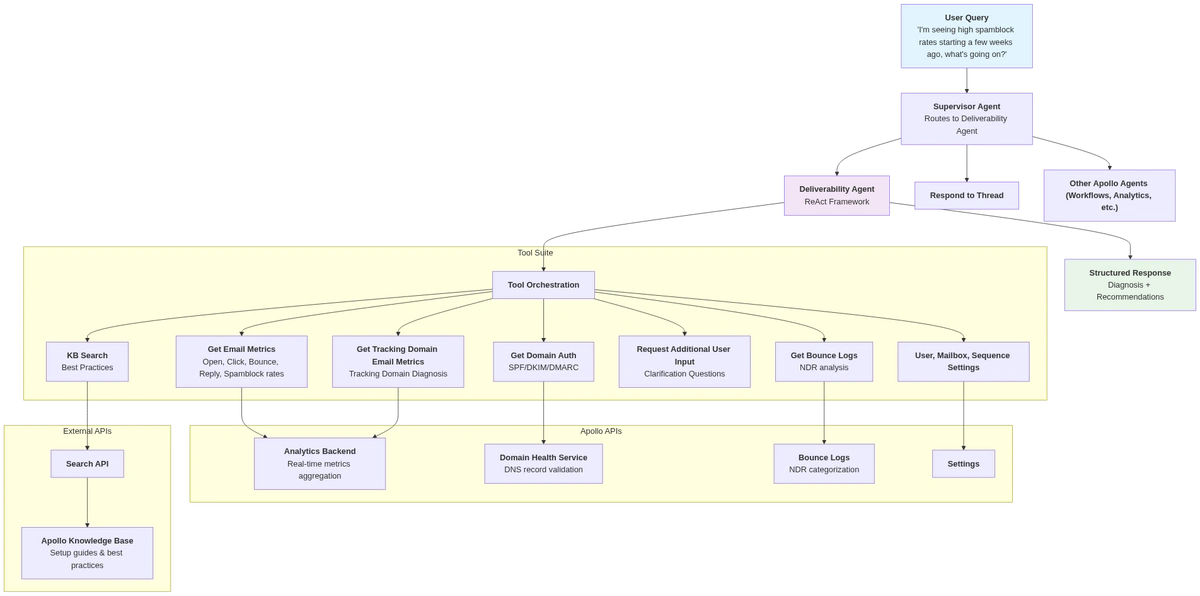

System Architecture

- Supervisor Agent: Part of the AI Assistant agent. It routes deliverability queries to the deliverability sub-agent and all other queries to the appropriate sub-agents.

- Deliverability Agent: The bread and butter of deliverability analysis. It orchestrates multiple tool calls and composes the results into a well-structured response to the user.

- Knowledge Base Integration: Search API + Apollo.io documentation

- Apollo APIs: Enable analytics, email diagnosis, account information retrieval, and more.

- External APIs: Enable the Knowledge Base (KB) search use case.
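The supervisor’s routing role can be pictured as a small dispatch step. The following is a hypothetical sketch, not Apollo’s implementation: the production supervisor presumably classifies queries with an LLM, whereas here a keyword match stands in for that classification, and the handler functions and keyword list are invented for illustration.

```python
from typing import Callable, Dict

# Hypothetical sub-agent handlers; real sub-agents would run their own ReAct loops.
def deliverability_agent(query: str) -> str:
    return f"[deliverability] analyzing: {query}"

def general_agent(query: str) -> str:
    return f"[general] answering: {query}"

# Keyword matching is a stand-in for LLM-based intent classification (an assumption).
DELIVERABILITY_KEYWORDS = (
    "bounce", "spam", "deliverability", "spf", "dkim", "dmarc", "warmup", "inbox",
)

# Dispatch table from route name to sub-agent.
SUB_AGENTS: Dict[str, Callable[[str], str]] = {
    "deliverability": deliverability_agent,
    "general": general_agent,
}

def classify(query: str) -> str:
    """Return the name of the sub-agent that should handle the query."""
    text = query.lower()
    if any(keyword in text for keyword in DELIVERABILITY_KEYWORDS):
        return "deliverability"
    return "general"

def supervise(query: str) -> str:
    """Route the query to the selected sub-agent and return its response."""
    return SUB_AGENTS[classify(query)](query)

print(supervise("Why is my bounce rate so high?"))
# [deliverability] analyzing: Why is my bounce rate so high?
```

Keeping the dispatch table separate from the classifier means a new sub-agent can be added with one entry, while the classification step is the part an LLM would replace.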

The agent, built using the ReAct framework (https://www.ibm.com/think/topics/react-agent), can be thought of as a “thinking” model that continuously iterates on its current state and decides what to do next. Its system prompt guides it in orchestrating the response to a user query. At a high level, here’s what the system prompt looks like:

- Has the user provided me enough information to answer the question, or do I need to clarify vague elements of their question?

- What additional data do I need in order to successfully respond to the user? This evaluation, and data-fetching, can be repeated multiple times.

- Combine my inherent knowledge, data retrieved from the user’s account, and KB knowledge to answer the user’s question in a detailed yet concise manner.
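To make the loop concrete, here is a framework-free sketch of the ReAct pattern: the model reasons about the next step, optionally calls a tool, observes the result, and repeats until it can answer. It is an illustration only; Apollo’s agent delegates this loop to LangGraph’s prebuilt `create_react_agent`. The scripted “LLM”, the `get_email_metrics` stub, and its numbers are hypothetical placeholders.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional


@dataclass
class Step:
    """One decision from the model: either a tool call or a final answer."""
    thought: str
    tool: Optional[str] = None
    tool_input: Optional[dict] = None
    answer: Optional[str] = None


# Hypothetical tool stub returning made-up data; a real tool would call an API.
def get_email_metrics(mailbox: str) -> dict:
    return {"mailbox": mailbox, "bounce_rate": 0.08}


TOOLS: Dict[str, Callable[..., dict]] = {"get_email_metrics": get_email_metrics}


def scripted_llm(question: str, observations: List[dict]) -> Step:
    """Stand-in for the LLM: requests metrics once, then answers from them."""
    if not observations:
        return Step(thought="I need this mailbox's metrics first.",
                    tool="get_email_metrics",
                    tool_input={"mailbox": "sales@example.com"})
    rate = observations[-1]["bounce_rate"]
    return Step(thought="I have enough data to answer.",
                answer=f"Your bounce rate is {rate:.0%}.")


def react_loop(question: str, llm: Callable[[str, List[dict]], Step],
               max_steps: int = 5) -> str:
    observations: List[dict] = []
    for _ in range(max_steps):
        step = llm(question, observations)             # Reason: decide the next action
        if step.answer is not None:
            return step.answer                         # The model chose to answer
        result = TOOLS[step.tool](**step.tool_input)   # Act: call the chosen tool
        observations.append(result)                    # Observe: feed the result back
    return "Stopped after reaching the step limit."


print(react_loop("Why are my emails bouncing?", scripted_llm))
# Your bounce rate is 8%.
```

The `max_steps` cap is a common safeguard so that a model which never settles on an answer cannot loop forever.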

While these high-level blocks appear straightforward, the agent performs complex operations behind the scenes. It comprehends how various metrics relate to each other and what they indicate about email performance. The agent conducts its analysis methodically, following a process similar to what a human engineer would use to investigate the same issue. Despite this sophisticated reasoning capability, the code framework implementing these functions is surprisingly simple, as shown below.

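```python
from typing import List, Optional

from langgraph.checkpoint.base import BaseCheckpointSaver
from langgraph.graph.state import CompiledStateGraph
from langgraph.prebuilt import create_react_agent

# ApolloChat, AssistantUIInterface, ApplicationContext, DeliverabilityAgentState,
# DEFAULT_TEMPERATURE, and the tool objects listed below are internal to Apollo
# and are assumed to be imported from its codebase.


class DeliverabilityAgent:
    def __init__(
        self,
        thread_id: str,
        model: str,  # required, so it must come before the defaulted parameters
        assistant_ui_interface: Optional[AssistantUIInterface] = None,
        contexts: Optional[List[ApplicationContext]] = None,
    ):
        # Name of the compiled agent graph (passed to create_react_agent below)
        self.name = "deliverability_agent_react"
        self.thread_id = thread_id
        self.assistant_ui_interface = assistant_ui_interface
        self.contexts = contexts

        # Chat model that drives the agent's reasoning and tool selection
        self.llm = ApolloChat(
            model=model,
            temperature=DEFAULT_TEMPERATURE,
            timeout=60,
            max_retries=3,
        )

        # All tools available to the ReAct agent; the model decides which to call
        self.available_tools = [
            get_user_settings_tool,
            get_mailbox_settings_tool,
            request_user_input_tool,
            get_authentication_status,
            get_email_metrics,
            get_tracking_domain_metrics,
            get_bounce_logs,
            get_verified_email_metrics,
            get_campaign_metric,
            deliverability_kb_search_tool,
        ]

    def get_agent_graph(self, checkpointer: BaseCheckpointSaver) -> CompiledStateGraph:
        """Create the ReAct agent using LangGraph's prebuilt create_react_agent."""
        return create_react_agent(
            model=self.llm,
            tools=self.available_tools,
            # create_system_message() (not shown) returns the system prompt
            prompt=self.create_system_message(),
            state_schema=DeliverabilityAgentState,
            # Persists conversation state across turns of the same thread
            checkpointer=checkpointer,
            name=self.name,
        )
```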

While the agent code is relatively simple, the complexity lies in the prompt engineering, which we’ll cover in the Challenges Faced section. But before we get there, how do you even know which prompts to change? That’s where evals come in.

Evaluation

We’ve written an agent. Great! Now, how do we know it works and handles every use case we want it to cover? More importantly, how do we ensure that it gives the correct output? The answer: LLM evals.

An eval is an end-to-end test that verifies that the agent responds to user queries consistently, makes all the expected tool calls, and avoids unnecessary ones. Let’s examine a real eval example to understand how this works.

The screenshot demonstrates that the LLM successfully made all expected tool calls with the correct parameters. The final agent response on the right side is semantically similar to the expected response on the left side.

Each use case we address has a corresponding eval. This ensures that while we enhance the agent, both new and existing functionalities work as expected.
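To make the idea concrete, here is a minimal sketch of what a single eval case might check, assuming the agent’s run has already been reduced to a list of tool calls and a final response. The `EvalCase` structure, the tool arguments, and the pass criteria are hypothetical. In particular, the word-overlap (Jaccard) score is only a crude stand-in for the semantic-similarity judgment described above, which in practice would more likely come from an LLM judge or embeddings.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple

ToolCall = Tuple[str, Dict[str, str]]  # (tool name, arguments)


@dataclass
class EvalCase:
    query: str
    expected_calls: List[ToolCall]
    expected_response: str
    min_similarity: float = 0.5


def word_overlap(a: str, b: str) -> float:
    """Jaccard similarity of lowercase word sets: a crude proxy for semantic similarity."""
    wa, wb = set(a.lower().split()), set(b.lower().split())
    return len(wa & wb) / len(wa | wb) if wa | wb else 1.0


def run_eval(case: EvalCase, actual_calls: List[ToolCall], actual_response: str) -> Dict[str, bool]:
    """Check that expected tool calls were made, no extra ones were, and the answer is close enough."""
    missing = [c for c in case.expected_calls if c not in actual_calls]
    unexpected = [c for c in actual_calls if c not in case.expected_calls]
    similar = word_overlap(case.expected_response, actual_response) >= case.min_similarity
    return {
        "all_expected_calls": not missing,
        "no_unexpected_calls": not unexpected,
        "response_similar": similar,
        "passed": not missing and not unexpected and similar,
    }


# Usage with a hypothetical case (the "domain" argument is invented).
case = EvalCase(
    query="Why are my emails going to spam?",
    expected_calls=[("get_authentication_status", {"domain": "example.com"})],
    expected_response="your domain is missing a dmarc record",
)
result = run_eval(
    case,
    actual_calls=[("get_authentication_status", {"domain": "example.com"})],
    actual_response="Your domain is missing a DMARC record",
)
print(result["passed"])  # True
```

Reporting each check separately, rather than a single pass/fail, makes it easier to tell whether a regression came from tool selection or from the wording of the final answer.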

You can implement evals with external tools (such as LangSmith or PromptLayer) or, as we did, build your own library for more precise control.

Challenges Faced & Lessons Learnt

We faced two major challenges while building this agent: (1) building a scalable system prompt that enables the agent to make its own decisions effectively, and (2) giving the agent a high-quality, up-to-date knowledge base.

System Prompt Engineering

After repeated cycles of failure and iteration, in which the agent didn’t quite grasp what different pieces of data meant or how to analyze results consistently, our biggest insight was this: the more detailed and concise your prompt and tool descriptions, the better the agent performs. Think of the agent as an intern: it knows nothing about your product or about the unique way your company views and debugs problems.

Just as you have an onboarding program for interns, you have one for the agent, in the form of a system prompt and tool descriptions. Tool descriptions should be highly detailed, capturing the tool’s arguments, what it does (and doesn’t do), its response format, and when the agent might want to use it. The system prompt needs to be similarly detailed and organized, capturing a systematic way to conceptualize and debug problems. A new hire at your company should be able to read the prompt and:

- Easily decompose the user’s query to understand (a) what they’re asking for and (b) what they need but aren’t explicitly stating (i.e., be as helpful as possible).

- Find definitions of words they don’t know (e.g., terminology specific to your product, or terms whose meaning for you differs in nuance from how other products or the rest of the world use them).

- Determine what knowledge or data to gather, and exactly where to gather it.

- Understand how to analyze the gathered data and answer the user’s original question.
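As an illustration of this onboarding material, the sketch below pairs a hypothetical tool whose docstring spells out its arguments, scope, response format, and when to use it, with a system prompt assembled from sections that mirror the checklist above. Everything in it is invented for illustration: `lookup_bounce_logs` is not one of Apollo’s tools, and the section text is placeholder content. Frameworks such as LangChain can use a function’s docstring as the tool description shown to the model, which is one reason to write it this carefully.

```python
from typing import Dict, List


def lookup_bounce_logs(mailbox_email: str, days: int = 7) -> List[Dict[str, str]]:
    """Fetch bounce events for ONE sending mailbox over a recent window.

    Args:
        mailbox_email: Address of the sending mailbox, e.g. "rep@example.com".
        days: How many days back to look (1-30).

    Does: return raw bounce events with the receiving server's reason text.
    Does NOT: compute bounce rates, or cover other mailboxes in the account.

    Returns:
        A list of {"recipient": str, "bounce_type": "hard" | "soft", "reason": str}.

    Use when: the user reports bounces or a high bounce rate and you need the
    specific reasons (invalid address, blocked, mailbox full) to diagnose them.
    """
    # Hypothetical stub: a real tool would query the email platform's API.
    return []


# System prompt sections mirroring the onboarding checklist (placeholder text).
PROMPT_SECTIONS: Dict[str, str] = {
    "Decompose the query": (
        "Identify what the user is asking and what they need but did not state."
    ),
    "Terminology": "Bounce rate: bounced emails divided by emails sent.",
    "Where to get data": (
        "Use lookup_bounce_logs for bounce reasons; search the knowledge base "
        "for product how-to questions."
    ),
    "How to analyze and answer": (
        "Explain what the data shows, why it matters, and the next steps to take."
    ),
}


def build_system_prompt(sections: Dict[str, str]) -> str:
    """Render each section as a titled block so the model sees a clear structure."""
    return "\n\n".join(f"## {title}\n{body}" for title, body in sections.items())


print(build_system_prompt(PROMPT_SECTIONS))
```

Keeping the prompt as named sections makes it easy to grow one area (for example, terminology) without rewriting the rest, and to trim sections if added detail starts to destabilize the agent.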

Note, however, that excessive verbosity has its own cost: it can make an agent unstable and non-deterministic. Start with sufficient detail, then experiment to see what works well for your agent; what we found works well for deliverability may not apply to all use cases.

Continuously Updated Knowledge Base

KB retrieval, a form of Retrieval-Augmented Generation (RAG) [https://www.promptingguide.ai/techniques/rag], is extremely powerful. Just as chat assistants like ChatGPT or Claude don’t know about real-time world events without performing a web search, you shouldn’t expect your agent to know about the hottest new feature you released unless it has access to a published, up-to-date data source (i.e., a knowledge base). Without one, you’re relying on data the model was trained on and on knowledge hardcoded into the system prompt, which can lead the model to give incorrect or outdated information. Before KB integration, we found it hard to keep the agent current as Apollo’s platform and feature set evolved; with it, the agent started performing really well on a variety of questions, such as “How do I set up tracking domains on Apollo?” or “What mailbox sending limits should I be using?”

There’s another benefit to KB integration: it promotes a documentation-first culture. Anything that’s well documented is easily accessible to both users and the agent, which makes documentation a powerful way to keep users up to date on features that make their use cases on Apollo easier.
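The retrieval step is simple in outline: find the KB articles most relevant to the question, then place them in the prompt so the model answers from current documentation rather than from its training data alone. The sketch below assumes a tiny in-memory KB and ranks articles by word overlap; that scoring is a stand-in for the external Search API the agent uses, and the article titles and text are invented.

```python
import re
from typing import Dict, List, Set

# Hypothetical in-memory knowledge base; real articles would come from a search API.
KB: List[Dict[str, str]] = [
    {"title": "Set up a tracking domain",
     "text": "Add a CNAME record for your tracking domain, then verify it in settings."},
    {"title": "Mailbox sending limits",
     "text": "Configure daily sending limits per mailbox and ramp them up gradually."},
    {"title": "Export contacts",
     "text": "Export contacts to CSV from the contacts list view."},
]


def tokenize(text: str) -> Set[str]:
    """Lowercase alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, kb: List[Dict[str, str]], k: int = 2) -> List[Dict[str, str]]:
    """Rank articles by how many question words they share (a stand-in for real search)."""
    q = tokenize(question)
    scored = [(len(q & tokenize(doc["title"] + " " + doc["text"])), doc) for doc in kb]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for score, doc in scored[:k] if score > 0]


def build_prompt(question: str, docs: List[Dict[str, str]]) -> str:
    """Ground the model in the retrieved articles and ask it to admit gaps."""
    context = "\n\n".join(f"[{d['title']}]\n{d['text']}" for d in docs)
    return (
        "Answer using only the knowledge base excerpts below. If they do not "
        "cover the question, say so.\n\n"
        f"{context}\n\nQuestion: {question}"
    )


question = "How do I set up tracking domains on Apollo?"
print(build_prompt(question, retrieve(question, KB)))
```

Because the agent reads whatever the KB currently contains, publishing a new article is enough to update its answers, which is the documentation-first benefit described above.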

What’s Next?

We want the deliverability agent to be your go-to tool for maximizing your outbound email deliverability. To achieve this, we plan to build integrations with sequences to help you optimize your sequence settings, email content, and A/B testing. We are also continuing to improve the agent so it can handle increasingly complex deliverability issues.

The Future of AI-Powered Email Intelligence

Our deliverability agent tackles the complexity of email performance diagnosis and deliverability debugging. By combining systematic metrics analysis with domain expertise and intelligent tool orchestration, we have created a system that turns hours of manual investigation into minutes of guided problem-solving. The agent demonstrates how AI can augment human expertise rather than replace it: by providing systematic analysis and knowledge retrieval, it lets users focus on strategic decisions rather than on gathering and analyzing data.

At Apollo, we’re solving problems once thought impossible, applying cutting-edge AI to real-world challenges at scale. Our fully remote team spans the globe, uniting brilliant engineers through collaboration, creativity, and impact. We’re scaling rapidly, building new hubs, and pushing the boundaries of AI, usability, and platform performance.

If you’re passionate about shaping the next chapter of intelligent systems and want to work alongside peers who thrive on solving the hardest problems, Apollo is where you belong.

🚀 Join us—explore open roles on our careers page today!